
This guide walks you through setting up the AI Proxy Advanced plugin with Anthropic.

For all providers, the Kong AI Proxy Advanced plugin attaches to route entities.

It can be installed into one route per operation, for example:

- OpenAI chat route
- Cohere chat route
- Cohere completions route

Each of these AI-enabled routes must point to a null service. This service doesn’t need to map to any real upstream URL. It can point somewhere empty (for example, http://localhost:32000), because the plugin overwrites the upstream URL.

This requirement will be removed in a later Kong revision.
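The route command later in this guide posts to /services/ai-proxy-advanced/routes. That means it assumes a null service named ai-proxy-advanced already exists. The sketch below builds the matching Admin API request with Python's standard library. It makes two assumptions: the Admin API listens on http://localhost:8001, as in the other commands here, and the helper name build_null_service_request is hypothetical.

```python
import urllib.parse
import urllib.request

# Assumption: Kong Admin API on its default local address, as used elsewhere in this guide.
ADMIN_API = "http://localhost:8001"


def build_null_service_request(name: str = "ai-proxy-advanced",
                               upstream: str = "http://localhost:32000") -> urllib.request.Request:
    """Build (but do not send) a POST /services request for a placeholder service.

    The upstream URL is never contacted: the AI Proxy Advanced plugin
    overwrites it for every proxied request.
    """
    body = urllib.parse.urlencode({"name": name, "url": upstream}).encode()
    return urllib.request.Request(f"{ADMIN_API}/services", data=body, method="POST")
```

To create the service, pass the request to `urllib.request.urlopen(build_null_service_request())`. This is equivalent to `curl -X POST http://localhost:8001/services --data name=ai-proxy-advanced --data url=http://localhost:32000`.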

Prerequisites

Provider configuration

After creating an Anthropic account and purchasing a subscription, you can create an AI Proxy Advanced route and plugin configuration.

Set up route and plugin

Create the route:

curl -X POST http://localhost:8001/services/ai-proxy-advanced/routes \
  --data "name=anthropic-chat" \
  --data "paths[]=~/anthropic-chat$"
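The same route can be created from Python. This sketch only builds the form-encoded request. The helper name build_route_request is hypothetical, and the Admin API address is assumed. In Kong 3.x, a path that starts with `~` is treated as a regular expression, and the trailing `$` anchors the match so that only /anthropic-chat is routed.

```python
import urllib.parse
import urllib.request

ADMIN_API = "http://localhost:8001"  # assumption: default Admin API address


def build_route_request(service: str = "ai-proxy-advanced",
                        route_name: str = "anthropic-chat") -> urllib.request.Request:
    """Build (but do not send) POST /services/{service}/routes, like the curl command."""
    # "~" marks a regex path; "$" anchors it to the end of the request path.
    fields = [("name", route_name), ("paths[]", f"~/{route_name}$")]
    body = urllib.parse.urlencode(fields).encode()
    # urllib adds Content-Type: application/x-www-form-urlencoded when sending form data.
    return urllib.request.Request(f"{ADMIN_API}/services/{service}/routes",
                                  data=body, method="POST")
```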

Enable and configure the AI Proxy Advanced plugin for Anthropic, replacing <anthropic_key> with your own API key.

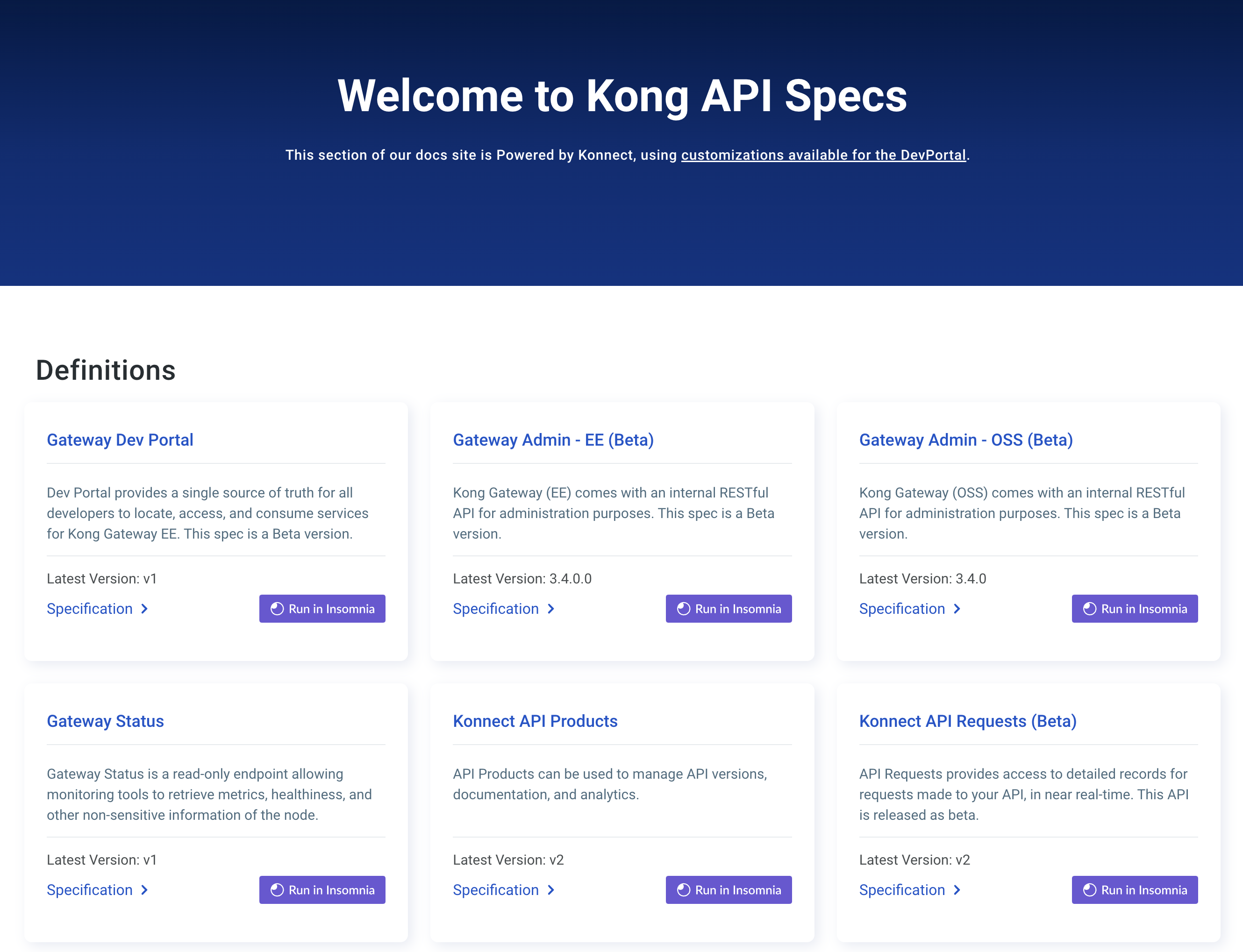

You can configure the plugin with the Kong Admin API, the Konnect API, Kubernetes, a declarative (YAML) configuration file, or Konnect Terraform.

Kong Admin API

Make the following request:

curl -X POST http://localhost:8001/routes/{routeName|Id}/plugins \
  --header "accept: application/json" \
  --header "Content-Type: application/json" \
  --data '
{
  "name": "ai-proxy-advanced",
  "config": {
    "targets": [
      {
        "route_type": "llm/v1/chat",
        "auth": {
          "header_name": "x-api-key",
          "header_value": "<anthropic_key>"
        },
        "model": {
          "provider": "anthropic",
          "name": "claude-2.1",
          "options": {
            "max_tokens": 512,
            "temperature": 1.0,
            "top_p": 0.5,
            "top_k": 256
          }
        }
      }
    ]
  }
}
'

Replace {routeName|Id} with the ID or name of the route that this plugin configuration will target (for example, anthropic-chat).
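If you generate this configuration programmatically, it can help to check the sampling options before Kong receives them. The sketch below is an illustration, not part of the plugin, and it rests on three assumptions:

- The helper names are hypothetical.
- The ranges come from Anthropic's documented sampling parameters (temperature and top_p between 0 and 1). This sketch also treats top_k as a positive integer.
- The key is sent in the x-api-key header that Anthropic's API expects.

Under those ranges, the example uses top_p 0.5 and top_k 256.

```python
import json
import urllib.request

ADMIN_API = "http://localhost:8001"  # assumption: default Admin API address


def anthropic_target(api_key: str, max_tokens: int = 512, temperature: float = 1.0,
                     top_p: float = 0.5, top_k: int = 256) -> dict:
    """Return one entry for config.targets, rejecting out-of-range sampling options."""
    # Ranges assumed from Anthropic's documented sampling parameters.
    if not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be between 0 and 1")
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be between 0 and 1")
    if not isinstance(top_k, int) or top_k < 1:
        raise ValueError("top_k must be a positive integer")
    return {
        "route_type": "llm/v1/chat",
        "auth": {"header_name": "x-api-key", "header_value": api_key},
        "model": {
            "provider": "anthropic",
            "name": "claude-2.1",
            "options": {
                "max_tokens": max_tokens,
                "temperature": temperature,
                "top_p": top_p,
                "top_k": top_k,
            },
        },
    }


def build_plugin_request(route: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) POST /routes/{route}/plugins with a JSON body."""
    payload = {"name": "ai-proxy-advanced",
               "config": {"targets": [anthropic_target(api_key)]}}
    return urllib.request.Request(
        f"{ADMIN_API}/routes/{route}/plugins",
        data=json.dumps(payload).encode(),
        method="POST",
        headers={"Content-Type": "application/json", "Accept": "application/json"},
    )
```

With these checks, a swapped pair such as top_p 256 fails locally with a ValueError instead of producing a rejected or misbehaving plugin configuration.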

Konnect API

Make the following request, substituting your own access token, region, control plane ID, and route ID:

curl -X POST \
  https://{us|eu}.api.konghq.com/v2/control-planes/{controlPlaneId}/core-entities/routes/{routeId}/plugins \
  --header "accept: application/json" \
  --header "Content-Type: application/json" \
  --header "Authorization: Bearer TOKEN" \
  --data '{"name":"ai-proxy-advanced","config":{"targets":[{"route_type":"llm/v1/chat","auth":{"header_name":"x-api-key","header_value":"<anthropic_key>"},"model":{"provider":"anthropic","name":"claude-2.1","options":{"max_tokens":512,"temperature":1.0,"top_p":0.5,"top_k":256}}}]}}'

See the Konnect API reference to learn about region-specific URLs and personal access tokens.
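Compared with the self-managed Admin API call, the Konnect request differs in three ways:

- the regional host,
- the control plane path,
- the bearer token.

The sketch below builds that request under the following assumptions. The helper build_konnect_plugin_request is hypothetical. The region is interpolated exactly as in the {us|eu} placeholder above. The plugin config is passed in as a dictionary.

```python
import json
import urllib.request


def build_konnect_plugin_request(region: str, control_plane_id: str, route_id: str,
                                 token: str, config: dict) -> urllib.request.Request:
    """Build (but do not send) the Konnect request that attaches the plugin to a route."""
    url = (f"https://{region}.api.konghq.com/v2/control-planes/{control_plane_id}"
           f"/core-entities/routes/{route_id}/plugins")
    payload = {"name": "ai-proxy-advanced", "config": config}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode(),
        method="POST",
        headers={
            "Accept": "application/json",
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # Konnect personal access token
        },
    )
```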

Kubernetes

First, create a KongPlugin resource:

echo '
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: ai-proxy-advanced-example
plugin: ai-proxy-advanced
config:
  targets:
  - route_type: llm/v1/chat
    auth:
      header_name: x-api-key
      header_value: "<anthropic_key>"
    model:
      provider: anthropic
      name: claude-2.1
      options:
        max_tokens: 512
        temperature: 1.0
        top_p: 0.5
        top_k: 256
' | kubectl apply -f -

Next, apply the KongPlugin resource to an ingress by annotating the ingress as follows:

kubectl annotate ingress INGRESS_NAME konghq.com/plugins=ai-proxy-advanced-example

Replace INGRESS_NAME with the name of the ingress that this plugin configuration will target.

You can see your available ingresses by running kubectl get ingress.

Note: The KongPlugin resource only needs to be defined once and can be applied to any service, consumer, or route in the namespace. If you want the plugin to be available cluster-wide, create the resource as a KongClusterPlugin instead of KongPlugin.
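For the cluster-wide option, the sketch below prints a KongClusterPlugin manifest as JSON, which `kubectl apply -f -` also accepts. It uses Anthropic's x-api-key header and top_p 0.5 / top_k 256, because Anthropic expects top_p between 0 and 1 and an integer top_k. It rests on two assumptions:

- The kubernetes.io/ingress.class: kong annotation, which Kong Ingress Controller expects on KongClusterPlugin resources.
- The helper name kong_cluster_plugin, which is hypothetical.

Because the resource is cluster-scoped, it carries no namespace.

```python
import json


def kong_cluster_plugin(name: str, api_key: str) -> dict:
    """Return a KongClusterPlugin manifest for the Anthropic target (hypothetical helper)."""
    return {
        "apiVersion": "configuration.konghq.com/v1",
        "kind": "KongClusterPlugin",
        "metadata": {
            "name": name,  # cluster-scoped: no namespace field
            # Assumption: Kong Ingress Controller selects KongClusterPlugins by ingress class.
            "annotations": {"kubernetes.io/ingress.class": "kong"},
        },
        "plugin": "ai-proxy-advanced",
        "config": {
            "targets": [{
                "route_type": "llm/v1/chat",
                "auth": {"header_name": "x-api-key", "header_value": api_key},
                "model": {
                    "provider": "anthropic",
                    "name": "claude-2.1",
                    "options": {"max_tokens": 512, "temperature": 1.0,
                                "top_p": 0.5, "top_k": 256},
                },
            }]
        },
    }


# Print the manifest so it can be piped into `kubectl apply -f -`.
print(json.dumps(kong_cluster_plugin("ai-proxy-advanced-example", "<anthropic_key>"), indent=2))
```

Save the script under any name (make_cluster_plugin.py is only an example) and run `python3 make_cluster_plugin.py | kubectl apply -f -`. Then annotate the ingress with konghq.com/plugins=ai-proxy-advanced-example as before.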

Declarative (YAML)

Add this section to your declarative configuration file:

plugins:
- name: ai-proxy-advanced
  route: ROUTE_NAME|ID
  config:
    targets:
    - route_type: llm/v1/chat
      auth:
        header_name: x-api-key
        header_value: "<anthropic_key>"
      model:
        provider: anthropic
        name: claude-2.1
        options:
          max_tokens: 512
          temperature: 1.0
          top_p: 0.5
          top_k: 256

Replace ROUTE_NAME|ID with the ID or name of the route that this plugin configuration will target.
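The snippet above is only the plugins section. For a self-contained DB-less setup, the null service, route, and plugin from this guide can be combined into one file. This sketch emits that file as JSON, which Kong's declarative loader accepts as well as YAML. Check these assumptions against your Kong version:

- the _format_version value "3.0",
- the nesting of the route under its service,
- the helper name declarative_config (hypothetical).

It uses the x-api-key header and top_p 0.5 / top_k 256, matching Anthropic's expected parameter ranges.

```python
import json


def declarative_config(api_key: str) -> dict:
    """Return a minimal DB-less config: null service, regex route, and the plugin."""
    target = {
        "route_type": "llm/v1/chat",
        "auth": {"header_name": "x-api-key", "header_value": api_key},
        "model": {
            "provider": "anthropic",
            "name": "claude-2.1",
            "options": {"max_tokens": 512, "temperature": 1.0, "top_p": 0.5, "top_k": 256},
        },
    }
    return {
        "_format_version": "3.0",  # assumption: Kong 3.x declarative format
        "services": [{
            "name": "ai-proxy-advanced",
            "url": "http://localhost:32000",  # null upstream; the plugin overwrites it
            "routes": [{
                "name": "anthropic-chat",
                "paths": ["~/anthropic-chat$"],
                "plugins": [{"name": "ai-proxy-advanced", "config": {"targets": [target]}}],
            }],
        }],
    }


print(json.dumps(declarative_config("<anthropic_key>"), indent=2))
```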

Konnect Terraform

Prerequisite: configure your personal access token:

terraform {
  required_providers {
    konnect = {
      source = "kong/konnect"
    }
  }
}

provider "konnect" {
  personal_access_token = "kpat_YOUR_TOKEN"
  server_url            = "https://us.api.konghq.com/"
}

Add the following to your Terraform configuration to create a Konnect Gateway Plugin. It assumes your configuration already defines konnect_gateway_control_plane.my_konnect_cp and konnect_gateway_route.my_route:

resource "konnect_gateway_plugin_ai_proxy_advanced" "my_ai_proxy_advanced" {
  enabled = true
  config = {
    targets = [
      {
        route_type = "llm/v1/chat"
        auth = {
          header_name  = "x-api-key"
          header_value = "<anthropic_key>"
        }
        model = {
          provider = "anthropic"
          name     = "claude-2.1"
          options = {
            max_tokens  = 512
            temperature = 1.0
            top_p       = 0.5
            top_k       = 256
          }
        }
      }
    ]
  }
  control_plane_id = konnect_gateway_control_plane.my_konnect_cp.id
  route = {
    id = konnect_gateway_route.my_route.id
  }
}

Test the configuration

Make an llm/v1/chat type request to test your new endpoint:

curl -X POST http://localhost:8000/anthropic-chat \
  -H 'Content-Type: application/json' \
  --data-raw '{ "messages": [ { "role": "system", "content": "You are a mathematician" }, { "role": "user", "content": "What is 1+1?"} ] }'
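The same test can be scripted. The plugin returns llm/v1/chat responses in the OpenAI chat-completion format, so the assistant's answer sits at choices[0].message.content. The sketch below assumes the proxy listens on http://localhost:8000, as in the curl command. The helper names are hypothetical.

```python
import json
import urllib.request

PROXY = "http://localhost:8000"  # assumption: Kong proxy on its default port


def build_chat_request(question: str) -> urllib.request.Request:
    """Build (but do not send) the same llm/v1/chat request as the curl command."""
    body = {"messages": [
        {"role": "system", "content": "You are a mathematician"},
        {"role": "user", "content": question},
    ]}
    return urllib.request.Request(
        f"{PROXY}/anthropic-chat",
        data=json.dumps(body).encode(),
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def extract_reply(response_body: bytes) -> str:
    """Pull the assistant message out of an OpenAI-style chat completion."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]
```

To run it against a live gateway: `with urllib.request.urlopen(build_chat_request("What is 1+1?")) as resp: print(extract_reply(resp.read()))`.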