Installation Options

Kong Gateway is a lightweight, high-performance API gateway. You can set it up through Kong Konnect or install it on a range of self-managed systems.

Set up your Gateway in under 5 minutes with Kong Konnect:

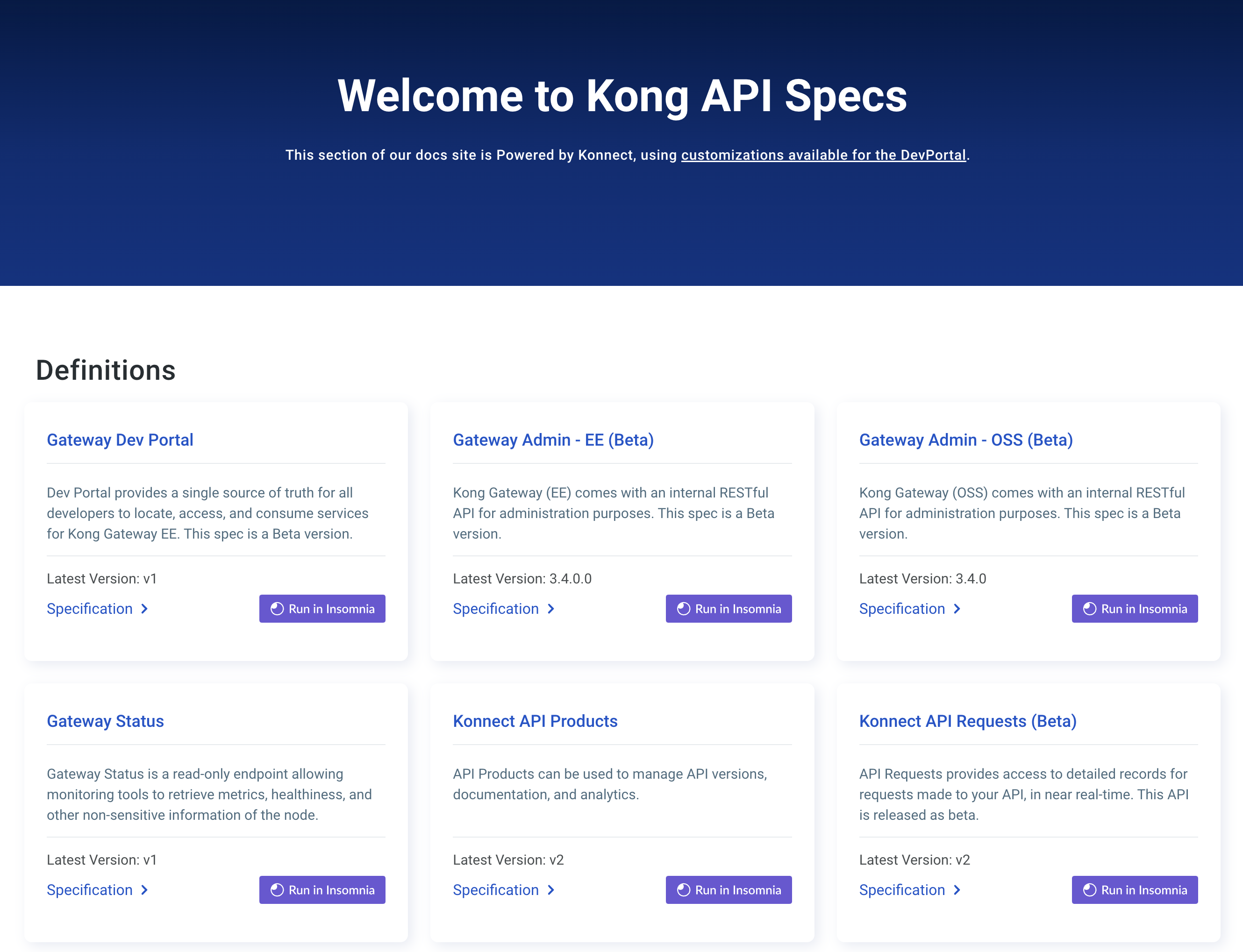

Kong Konnect is an API lifecycle management platform that lets you build modern applications better, faster, and more securely.

Or set up Kong Gateway on a self-managed system: